Artificial Intelligence (AI): Friend or Foe?

In the realm of AI’s vast benefits, there looms a shadow of vulnerability to malicious exploitation. To explore this contrast further, one must acknowledge that with great power comes great responsibility. Just as fire can be a beacon of warmth and progress or a destructive force when misused, AI possesses the same duality.

February 26, 2024

The rapid advancement of Artificial Intelligence (AI) represents a double-edged sword. It offers vast opportunities for progress across numerous fields while simultaneously raising concerns about its potential misuse. AI’s ability to learn, reason, and make decisions promises to revolutionize healthcare, transportation, education, and beyond, enhancing problem-solving and efficiency.

However, like most game-changing discoveries, AI casts a looming shadow: the possibility that bad actors will exploit it for malicious ends. The fear stems from scenarios in which AI could be weaponized for cyberattacks, surveillance, or even autonomous weaponry, posing significant threats to privacy, security, and societal stability, and perhaps even to humanity’s existence.

AI reshapes sectors, offers vast benefits

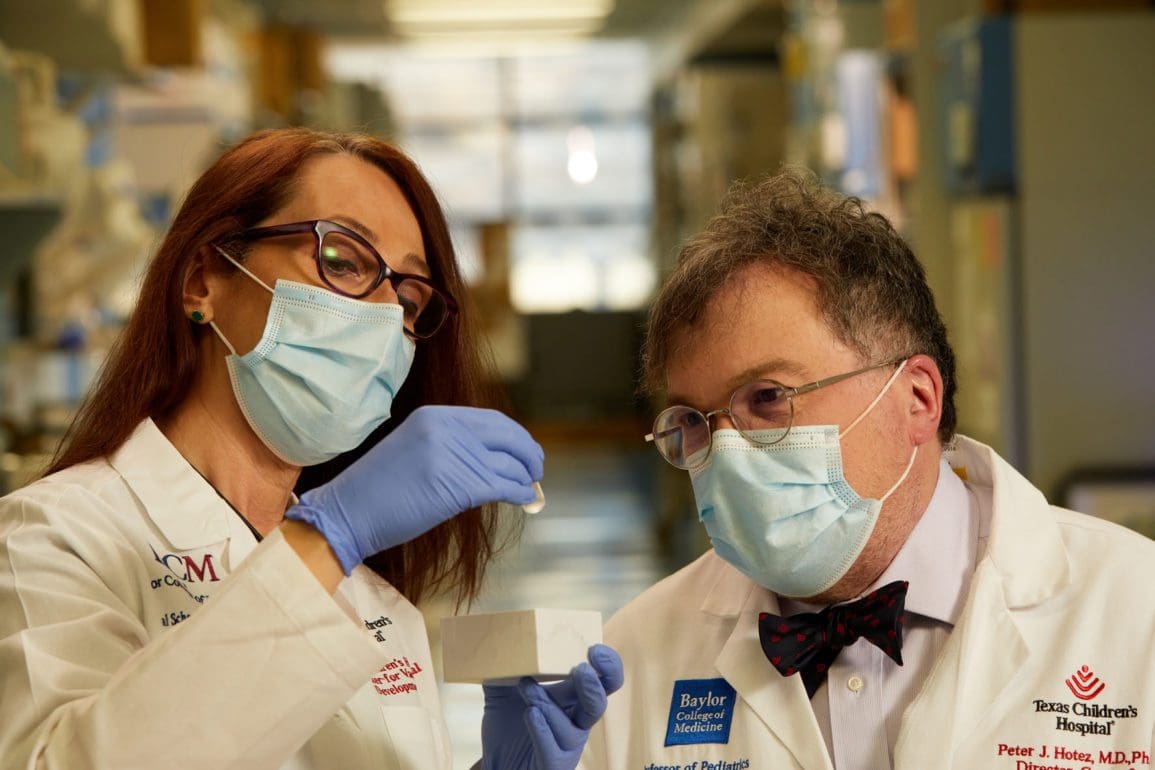

AI and machine learning were pivotal in combating COVID-19, helping to scale communications, track the virus’s spread, and accelerate research and treatment efforts. For instance, Clevy.io, a French start-up and Amazon Web Services customer, launched a chatbot to screen for COVID-19 symptoms and provide official information to the public. Drawing on real-time data from the French government and the WHO, the chatbot handled millions of inquiries on topics ranging from symptoms to government policies.

About 83 percent of executives recognize the capacity of science and technology to tackle global health issues, signaling a growing inclination towards AI-powered healthcare. In a groundbreaking development, University College London has paved the way for brain surgery using artificial intelligence, which could revolutionize the field within the next two years. Recognized by the government as a significant advancement, it holds the promise of transforming healthcare in the UK.

Furthermore, in December 2023, Google introduced MedLM, a family of AI models tailored for healthcare tasks such as assisting clinicians with research and summarizing doctor-patient interactions. It is now available to eligible Google Cloud customers in the United States.

AI is also reshaping sectors such as banking, insurance, law enforcement, transportation, and education. It detects fraud, streamlines procedures, aids investigations, enables autonomous vehicles, deciphers ancient languages, and improves teaching and learning. AI also supports everyday tasks, saving time and reducing mental strain.

Experts taken aback by quick progress of AI

As technology improves, it is becoming more likely that AI will be able to perform many of the tasks currently done by professionals such as lawyers, accountants, teachers, programmers, and journalists. These jobs may change substantially or even become automated. According to a report by the investment bank Goldman Sachs, AI could expose the equivalent of 300 million full-time jobs worldwide to automation while increasing the annual value of goods and services produced globally by seven percent. The report also suggests that up to a quarter of work tasks in the US and Europe could be automated by AI. These projections underscore the transformative impact AI could have on the labor market.

Many experts have been taken aback by the speed of AI development. Prominent figures including Elon Musk and Apple co-founder Steve Wozniak were among 1,300 signatories of an open letter calling for a six-month pause on training AI systems more powerful than GPT-4, in order to address the dangers arising from the technology’s rapid advancement. In an October 2023 report, the UK government warned that AI could aid hackers in cyberattacks and potentially help terrorists plan biological or chemical attacks. Experts, including the leaders of OpenAI and Google DeepMind, have also warned that AI could potentially lead to humanity’s extinction. Dozens of them have supported a statement published on the website of the Center for AI Safety: “Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.”

Artificial intelligence, elections, deepfakes, and AI bombs

In addition, the swift evolution of artificial intelligence may be disrupting democratic processes such as elections. Generative AI, capable of creating convincing but fake content, particularly deepfake videos, blurs the line between fact and fiction in politics, posing significant risks to the integrity of democratic systems worldwide. Gary Marcus, a professor emeritus at New York University, warns: “The biggest immediate risk is the threat to democracy…there are a lot of elections around the world in 2024, and the chance that none of them will be swung by deep fakes and things like that is almost zero.”

The CEO of OpenAI, Sam Altman, emphasized the importance of addressing “very subtle societal misalignments” that could lead AI systems to cause significant harm, rather than focusing solely on scenarios like “killer robots walking on the street.” He also referenced the International Atomic Energy Agency (IAEA) as a model for international cooperation in overseeing potentially dangerous technologies like nuclear power.

A survey from Stanford University found that more than one-third of the researchers polled believe artificial intelligence could cause a “nuclear-level catastrophe”, highlighting widespread concern within the field about the dangers of such rapidly advancing technology. The results add to growing calls for AI regulation, which have been fueled by controversies such as incidents in which chatbots were linked to suicides and deepfake videos showing Ukrainian President Volodymyr Zelenskyy supposedly surrendering to Russian forces.

In “AI and the Bomb,” published in 2023, James Johnson of the University of Aberdeen envisions an accidental nuclear war in the East China Sea in 2025, triggered by AI-powered intelligence on both the U.S. and Chinese sides.

The proliferation of autonomous weapons

One of the most dangerous applications of AI, and one already under way, is its integration into military systems, notably autonomous weapons, which raises ethical, legal, and security concerns. These “killer robots” risk unintended consequences, loss of human control, misidentification, and targeting errors, potentially escalating conflicts.

In November 2020, the prominent Iranian nuclear scientist Mohsen Fakhrizadeh was killed in an attack involving a remote-controlled machine gun believed to have been used by Israel. Reports suggest that the weapon used artificial intelligence to identify its target and carry out the assassination.

The proliferation of autonomous weapons could destabilize global security and trigger an arms race, given the absence of clear regulations and international norms governing their use in warfare. The risks of lacking a shared framework are already evident in the wars in Ukraine and Gaza. The Ukrainian front line has seen a surge in unmanned aerial vehicles equipped with AI-powered targeting systems, enabling near-instantaneous destruction of military assets. In Gaza, AI reshaped warfare after Hamas disrupted Israeli surveillance: Israel responded with “the Gospel,” an AI targeting platform that increased the number of targets struck but raised concerns about civilian harm.

Countries worldwide are calling for urgent regulation of AI because of its risks and potentially serious consequences. Concerted efforts are therefore needed to establish clear guidelines and standards that ensure the responsible and ethical deployment of AI systems.

Critical safety measures for artificial intelligence: we cannot solve problems in silos

In November 2023, top AI developers meeting at Britain’s inaugural global AI Safety Summit pledged to work with governments to test emerging AI models before deployment in order to mitigate risks. Additionally, the U.S., Britain, and more than a dozen other nations unveiled a non-binding agreement outlining broad recommendations for AI safety, including monitoring for misuse, safeguarding data integrity, and vetting software providers.

The U.S. plans to launch an AI safety institute to assess risks, while President Biden’s executive order requires developers of the most powerful AI systems to share their safety test results with the government. In the EU, lawmakers ratified a provisional agreement on AI rules, paving the way for the world’s first comprehensive AI legislation. EU countries have also endorsed the AI Act, which regulates government use of AI in biometric surveillance as well as general-purpose AI systems such as ChatGPT.

AI regulation is crucial for ensuring the ethical and responsible development, deployment, and use of artificial intelligence technologies. Without regulation, there is a risk of AI systems being developed and utilized in ways that harm individuals, society, and the environment.

However, confronting the dark and nefarious challenges that come with AI country by country will be as futile as our attempts to overcome the existential, game-changing COVID-19 virus and the global pandemic of 2020-2023 that accompanied it. Climate change, world hunger, child labour, child marriage, waste, immigration, refugee crises, and terrorism are just a few of the many global issues we will fail to solve if we keep tackling them in nation-by-nation silos.

Only through collaboration and cooperation will we stand any chance of overcoming these game-changing challenges. Likewise, only through collaboration and cooperation will we devise effective global regulations that promote trust in AI systems and foster innovation that benefits humanity as a whole, while minimizing the risk of potential harm.